Python Data Engineering: Comprehensive Workflow for Data Modeling, Analytics with DuckDB

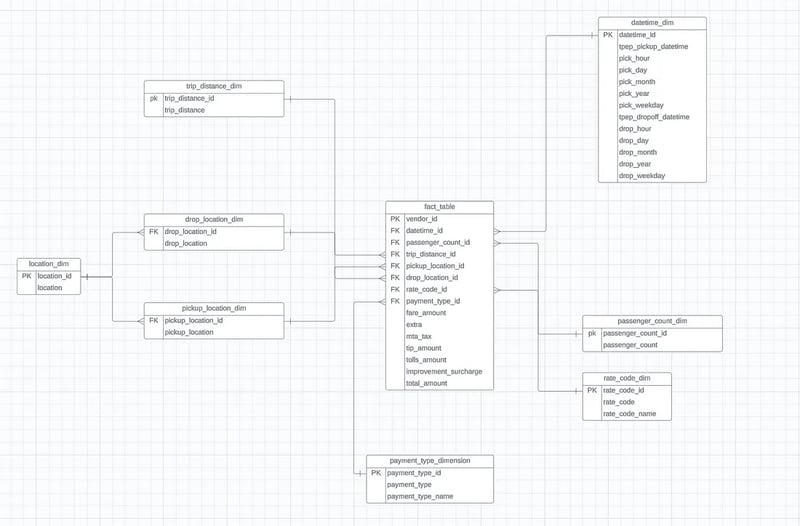

Overview Our primary goal is to convert the raw dataset into structured Dimension and Fact tables, allowing for efficient analysis and modelling. This process involves data cleaning and creating specific dimension tables covering attributes like date-time, passenger count, trip distance, payment types, and more. We will touch the fundamentals of data modelling, granularity and basic data engineering terminologies in simple human friendly terms. Additionally, we’ll explore automation using Python libraries, such as pandas, DuckDB and highlight the advantages of the Parquet file format, and perform analytical queries to derive meaningful insights. Prerequisites Python 3 Pandas DuckDB Jupyter Notebook (optional) Vs Code — Vs code provides notebook support out of the box, if open a file with extension of .ipynb it will open it in notebook like interface Introduction to the Data The provided dataset contains detailed records of taxi trips from the year 2023 trips. It captures pivotal information such as pick-up and drop-off dates/times, trip distances, fare details, passenger counts, and more. Note: It’s important to note that this data was collected and supplied to the TLC by technology providers under authorised programs. Therefore, while being valuable, the accuracy and completeness of the dataset might not be guaranteed. Dataset Download Link: https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB Github Reository for the code used in article: https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB Data Dictionary / Data Catalog A data dictionary or data catalog is like a detailed map or directory that contains descriptions and explanations of all the datasets and their elements (like tables, columns, or fields) Below image contains all the data points we will getting and their descriptions from the dataset. Process Flow of the Pipeline 1 — Loading and Data Preprocessing The analysis starts with loading the data into a Pandas DataFrame within the Jupyter Notebook environment. This initial step involves crucial data preprocessing tasks such as: Checking data types and ensuring appropriate formats for date-time columns. Removing duplicate entries to maintain data integrity. Generating a unique Trip ID for each trip record for identification purposes. (also known as surrogate key). 2 — Constructing Dimension Tables Once, the data is fully cleaned. We creates dimension tables for different entities Dimension Name Description datetime_dim Captures datetime-related attributes such as pickup and drop-off hours, days, months, years, and weekdays. passenger_count_dim Contains information about passenger count. trip_distance_dim Holds details about trip distances. rate_code_dim Provides insights into different rate codes and their corresponding names. location_dim A lookup table for locations that maps locationID for pickup/dropoff to location_name. pickup_location_dim Stores data about pickup locations. dropoff_location_dim Holds data regarding drop-off locations. payment_type_dim Contains information about various payment types. 3 — Building the Fact Table The Fact table, the heart of a dimensional model, is constructed by merging various Dimension tables. It encapsulates essential information such as trip details, fare amounts, payment details, dropoff_location_dim, pickup_location_dim, passenger details and more. It encapsulates essential information such as trip details, fare amounts, payment details, dropoff_location_dim, pickup_location_dim, passenger details and more. Diagram of Data Model 4 — Analytics using Our Data Model We will write some analytical queries using duckdb to query data from our data model. This will present and help us identify different usecases. Implementation Installations In order to proceed, We will need to install some python dependencies. Code import necessary packages in python import pandas as pd import duckdb #load data from `parquet` file df =pd.read_parquet('https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB/raw/main/nyc_taxi_trip_data/2023/2023.parquet') # display dataframe display(df.head()) # meta info on dataframe - df.shape outputs (rows, columns). print(f'Dataset Contains: {df.shape[0]} rows, {df.shape[1]} columns') outputs: Some details about parquet file format CSV files store data in rows with values separated by delimiters, while JSON uses key-value pairs for structured data. Parquet, however, arranges data by columns, allowing more efficient storage and retrieval of column-specific information. It employs advanced compression techniques and encoding methods, like Run-Length and Dictionary Encoding, resulting in smaller file sizes and faster query performance. This optimized structure makes Parquet well-suited for analytical workloads, big data processing,

Overview

Our primary goal is to convert the raw dataset into structured Dimension and Fact tables, allowing for efficient analysis and modelling. This process involves data cleaning and creating specific dimension tables covering attributes like date-time, passenger count, trip distance, payment types, and more.

We will touch the fundamentals of data modelling, granularity and basic data engineering terminologies in simple human friendly terms.

Additionally, we’ll explore automation using Python libraries, such as pandas, DuckDB and highlight the advantages of the Parquet file format, and perform analytical queries to derive meaningful insights.

Prerequisites

- Python 3

- Pandas

- DuckDB

- Jupyter Notebook (optional)

- Vs Code

- — Vs code provides notebook support out of the box, if open a file with extension of .ipynb it will open it in notebook like interface

Introduction to the Data

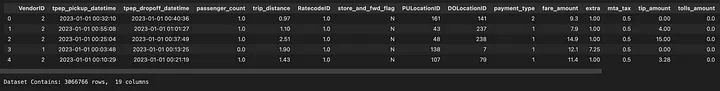

The provided dataset contains detailed records of taxi trips from the year 2023 trips. It captures pivotal information such as pick-up and drop-off dates/times, trip distances, fare details, passenger counts, and more.

Note:

It’s important to note that this data was collected and supplied to the TLC by technology providers under authorised programs. Therefore, while being valuable, the accuracy and completeness of the dataset might not be guaranteed.

Dataset Download Link:

https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB

Github Reository for the code used in article:

https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB

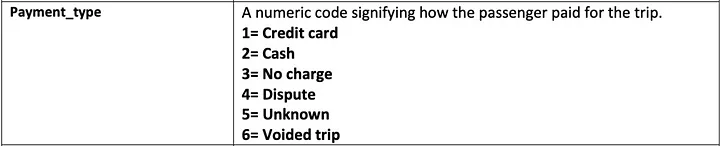

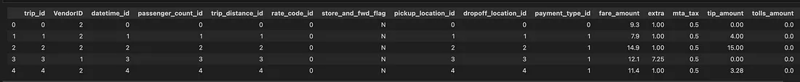

Data Dictionary / Data Catalog

A data dictionary or data catalog is like a detailed map or directory that contains descriptions and explanations of all the datasets and their elements (like tables, columns, or fields)

Below image contains all the data points we will getting and their descriptions from the dataset.

Process Flow of the Pipeline

1 — Loading and Data Preprocessing

The analysis starts with loading the data into a Pandas DataFrame within the Jupyter Notebook environment. This initial step involves crucial data preprocessing tasks such as:

- Checking data types and ensuring appropriate formats for date-time columns.

- Removing duplicate entries to maintain data integrity.

- Generating a unique Trip ID for each trip record for identification purposes. (also known as surrogate key).

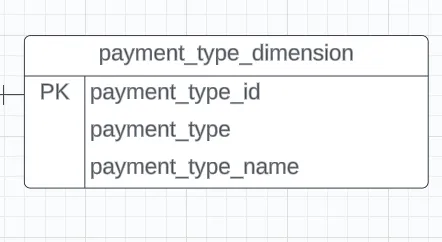

2 — Constructing Dimension Tables

Once, the data is fully cleaned. We creates dimension tables for different entities

| Dimension Name | Description |

|---|---|

| datetime_dim | Captures datetime-related attributes such as pickup and drop-off hours, days, months, years, and weekdays. |

| passenger_count_dim | Contains information about passenger count. |

| trip_distance_dim | Holds details about trip distances. |

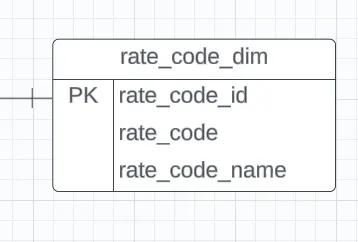

| rate_code_dim | Provides insights into different rate codes and their corresponding names. |

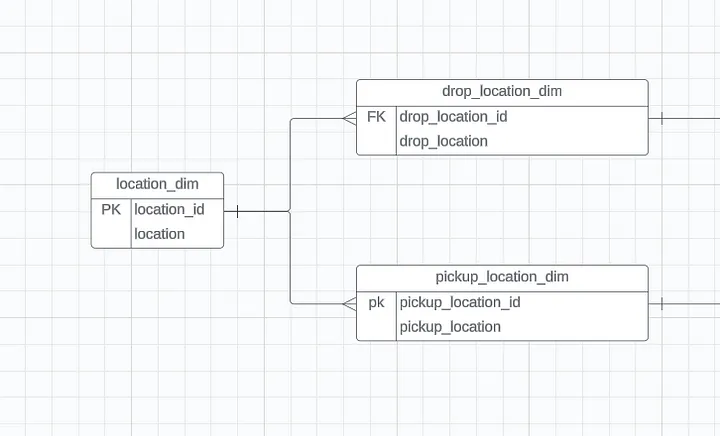

| location_dim | A lookup table for locations that maps locationID for pickup/dropoff to location_name. |

| pickup_location_dim | Stores data about pickup locations. |

| dropoff_location_dim | Holds data regarding drop-off locations. |

| payment_type_dim | Contains information about various payment types. |

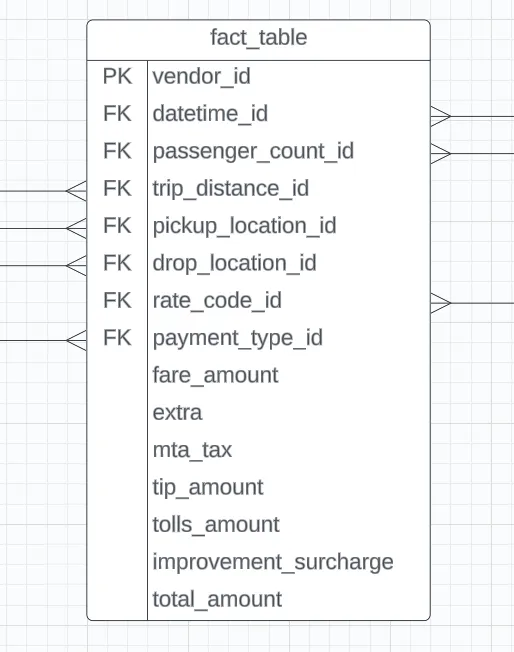

3 — Building the Fact Table

The Fact table, the heart of a dimensional model, is constructed by merging various Dimension tables. It encapsulates essential information such as trip details, fare amounts, payment details, dropoff_location_dim, pickup_location_dim, passenger details and more.

It encapsulates essential information such as trip details, fare amounts, payment details, dropoff_location_dim, pickup_location_dim, passenger details and more.

Diagram of Data Model

4 — Analytics using Our Data Model

We will write some analytical queries using duckdb to query data from our data model. This will present and help us identify different usecases.

Implementation

Installations

In order to proceed, We will need to install some python dependencies.

Code

import necessary packages in python

import pandas as pd

import duckdb

#load data from `parquet` file

df =pd.read_parquet('https://github.com/DanielOX/Data-Engineering-Workflow-DUCKDB/raw/main/nyc_taxi_trip_data/2023/2023.parquet')

# display dataframe

display(df.head())

# meta info on dataframe - df.shape outputs (rows, columns).

print(f'Dataset Contains: {df.shape[0]} rows, {df.shape[1]} columns')

outputs:

Some details about parquet file format

CSV files store data in rows with values separated by delimiters, while JSON uses key-value pairs for structured data.

Parquet, however, arranges data by columns, allowing more efficient storage and retrieval of column-specific information. It employs advanced compression techniques and encoding methods, like Run-Length and Dictionary Encoding, resulting in smaller file sizes and faster query performance.

This optimized structure makes Parquet well-suited for analytical workloads, big data processing, and data warehousing, as it significantly reduces storage needs and enhances processing speeds compared to CSV and JSON formats.

I will always prefer working with parquet format for my analytical projects, even if the source file csv/json, i will always try to convert it into parquet format.

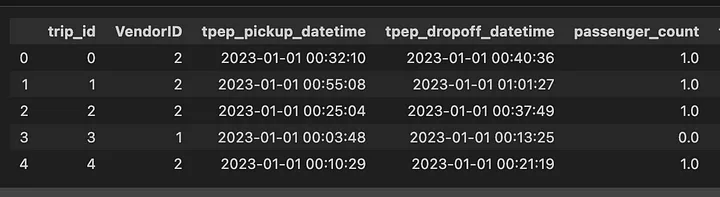

**Preprocessing Steps & Surrogate Key

We will perform a basic preprocessing step

- Drop duplicates

- Add a surrogate key

Each record in dataset represents a trip. We can apply complex hashing (md5, sha256) of concatenating multiple columns to represent a unique record for each trip or we can just assign the monotically increasing id. Both can work, i will be applying monotonically increasing id. Pandas provide this out of the box when we load in the dataframe. it is called index.

Just for the reference, if some of the folks wants to take hashing route. one can apply the logic as below. please note it’s gonna be a little slow.

# Will not be using in this article, code written here just for reference only

# Continue from the below code block

def genMd5Hash(*args) -> str:

string = ''.join([str(arg) for arg in args])

""" Function to generate md5 when given a string """

return hashlib.md5(string.encode())

df['trip_id'] = df.apply(genMd5Hash,axis=1)

# Just adding for sequence. the trip_id columns would be located at the end, so i will filtered out all the cols except `trip_id`

cols = list(filter(lambda x: x != 'trip_id', df.columns))

# combining array in this way ensures `trip_id` is always on the first columns, just for readability purposes

cols = ['trip_id'] + cols

df[cols].head()

# this will drop duplicates

df = df.drop_duplicates()

# It will insert `trip_id` to the first location in columns

df.insert(0,'trip_id',df.index)

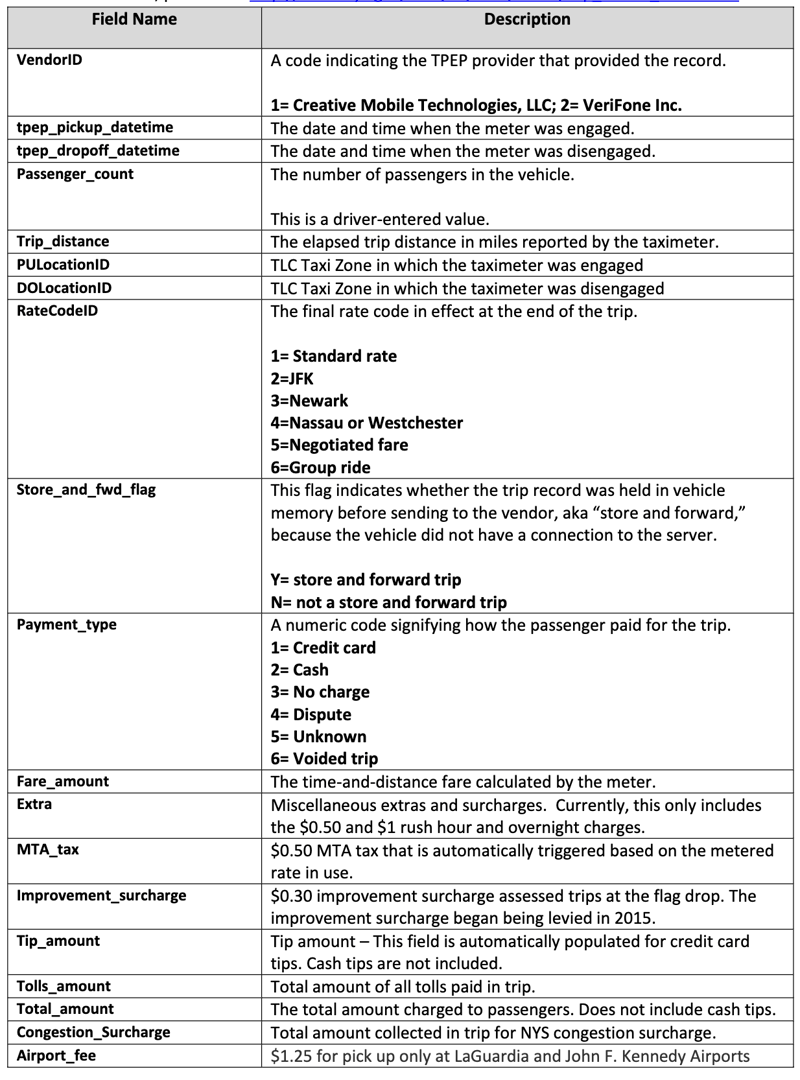

output:

As we can see trip_id column was added, which is nothing by a monotonically increasing id or index from data frame.

Data Modelling

Now preprocessing of the data is completed. The next step would be to create data model.

Some definitions of data model and dimnensions in a layman terms.

Definitions

Data Model

A data model is like a blueprint or a plan that defines how data is organized, structured, and related within a database or system.

Why do we need it?

It helps in understanding the different types of data, their relationships, and how they can be accessed or retrieved.

Analogy

Imagine you’re building a house. Before construction begins, you have architectural plans that detail where rooms will be located, how they connect, and what materials will be used. Similarly, a data model outlines how data will be stored, what types of data are needed, and how different pieces of data relate to each other.

Dimension

A dimension in a model refers to a way of organizing or categorizing data to help understand and analyze information more effectively. It’s like grouping or labeling data based on specific attributes or characteristics.

Why do we need it?

Dimensions provide context and structure to data, making it easier to study and gain insights. They help in organizing information in a meaningful way, allowing users to ask questions and perform analyses that can lead to better decision-making.

Analogy

In a sales model, time can be a dimension. You might break down time into years, months, and days to analyze sales performance over different time periods. Similarly, in a customer model, age or location can be dimensions to categorize customers based on their age groups or geographic regions.

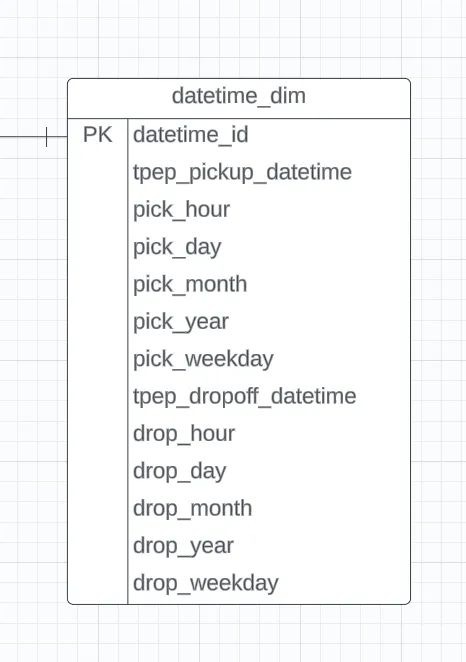

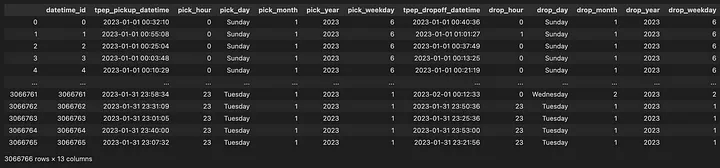

Creating datetime_dim dimension table

datetime_dim = df[['tpep_pickup_datetime','tpep_dropoff_datetime']].reset_index(drop=True)

# PK

datetime_dim['datetime_id'] = datetime_dim.index

# pickup datetime

datetime_dim['pick_hour'] = datetime_dim['tpep_pickup_datetime'].dt.hour

datetime_dim['pick_day'] = datetime_dim['tpep_pickup_datetime'].dt.day_name()

datetime_dim['pick_month'] = datetime_dim['tpep_pickup_datetime'].dt.month

datetime_dim['pick_year'] = datetime_dim['tpep_pickup_datetime'].dt.year

datetime_dim['pick_weekday'] = datetime_dim['tpep_pickup_datetime'].dt.weekday

# drop off datetime

datetime_dim['drop_hour'] = datetime_dim['tpep_dropoff_datetime'].dt.hour

datetime_dim['drop_day'] = datetime_dim['tpep_dropoff_datetime'].dt.day_name()

datetime_dim['drop_month'] = datetime_dim['tpep_dropoff_datetime'].dt.month

datetime_dim['drop_year'] = datetime_dim['tpep_dropoff_datetime'].dt.year

datetime_dim['drop_weekday'] = datetime_dim['tpep_dropoff_datetime'].dt.weekday

# giving proper ordering to columns for readability purpose

cols_seq = ['datetime_id', 'tpep_pickup_datetime', 'pick_hour', 'pick_day', 'pick_month', 'pick_year', 'pick_weekday',

'tpep_dropoff_datetime', 'drop_hour', 'drop_day', 'drop_month', 'drop_year', 'drop_weekday']

datetime_dim = datetime_dim[cols_seq]

display(datetime_dim)

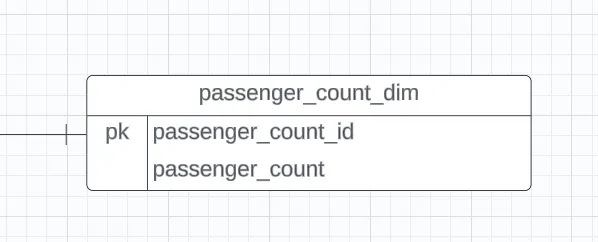

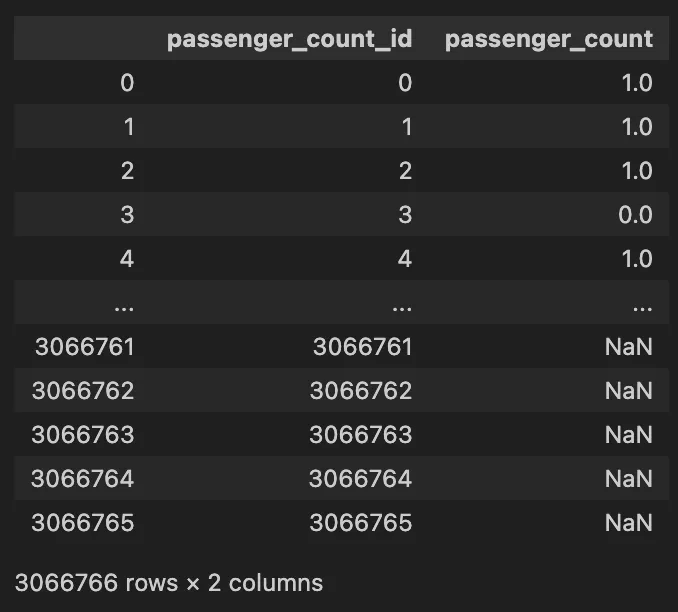

Creating passenger_count_dim dimension table

passenger count dim

passenger_count_dim = df[['passenger_count']].reset_index(drop=True)

passenger_count_dim['passenger_count_id'] = passenger_count_dim.index

# giving proper ordering to columns for readability purpose

cols_seq = ['passenger_count_id','passenger_count']

passenger_count_dim = passenger_count_dim[cols_seq]

display(passenger_count_dim)

output:

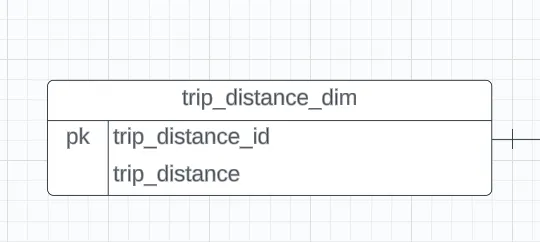

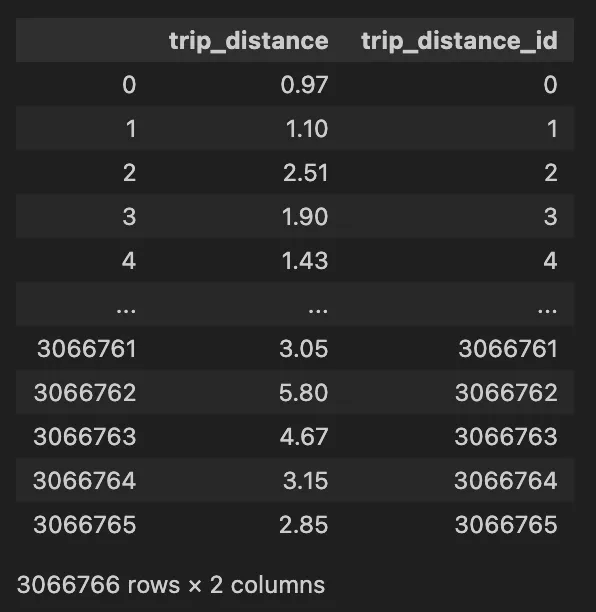

Creating trip_distance_dim dimension table

trip distance dimension

trip_distance_dim = df[['trip_distance']].reset_index(drop=True)

trip_distance_dim['trip_distance_id'] = trip_distance_dim.index

# giving proper ordering to columns for readability purpose

cols_seq = ['trip_distance_id', 'trip_distance']

trip_distance_dim = trip_distance_dim

display(trip_distance_dim)

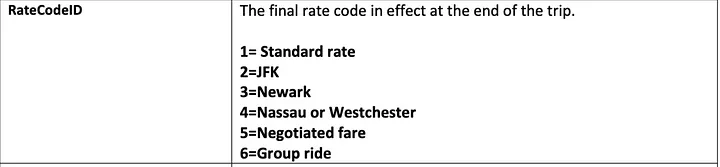

Creating rate_code_dim dimension table

if we look in the data dictionary the information about rate code id are provided e.g 1 = ‘Standard rate’ etc. We can add this meta info in our dimension table to make it more helpful for our analysts.

# Mapping of rate code

rate_code_type = {

1:"Standard rate",

2:"JFK",

3:"Newark",

4:"Nassau or Westchester",

5:"Negotiated fare",

6:"Group ride"

}

rate_code_dim = df[['RatecodeID']].drop_duplicates().reset_index(drop=True)

rate_code_dim['rate_code_id'] = rate_code_dim.index

# map `id` to the name of rate type

rate_code_dim['rate_code_name'] = rate_code_dim['RatecodeID'].map(rate_code_type)

# giving proper ordering to columns for readability purpose

cols_seq = ['rate_code_id','RatecodeID','rate_code_name']

rate_code_dim = rate_code_dim[cols_seq]

display(rate_code_dim)

Creating pickup_location_dim dimension table

We have PULocationID and DOLocationID column which doesn’t mean anything to us unless we have a location name. Therefore, we will be using a lookup table which has mappings for each of location_id and their zone/location. If we refer to the above diagram, the lookup table which i am referring to is as follows.

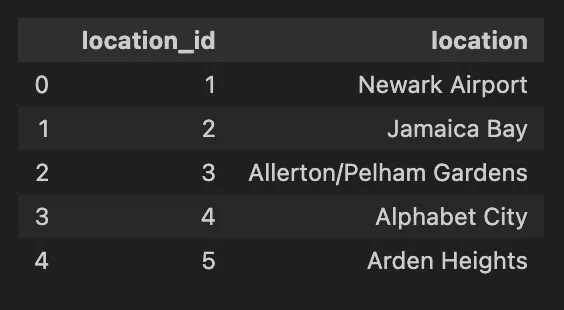

Creating location_dim dimension table

# loading lookup table

lookup_location = pd.read_csv('https://raw.githubusercontent.com/DanielOX/Data-Engineering-Workflow-DUCKDB/main/nyc_taxi_trip_data/taxi%2B_zone_lookup.csv')[['LocationID','Zone']].drop_duplicates().reset_index(drop=True)

location_dim = lookup_location.rename(columns={'LocationID':'location_id','Zone':'location'})

location_dim.head()

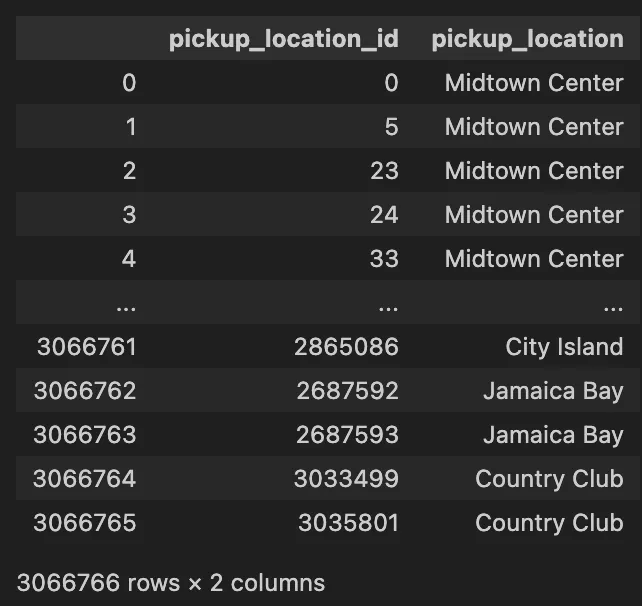

Creating pickup_location_dim dimension table

# preparing dimension table

pickup_location_dim = df[['PULocationID']].reset_index(drop=True)

pickup_location_dim['pickup_location_id'] = pickup_location_dim.index

cols_select = ['pickup_location_id','location']

# Join `pickup_location_dim` with `location_dim`

pickup_location_dim = pickup_location_dim.merge(location_dim, left_on='PULocationID', right_on='location_id')[cols_select]

# giving proper ordering to columns for readability purpose

cols_seq = ['pickup_location_id','location']

pickup_location_dim = pickup_location_dim[cols_seq]

#rename `location` to `pickup_location` for good readability

pickup_location_dim = pickup_location_dim.rename(columns={'location':'pickup_location'})

display(pickup_location_dim)

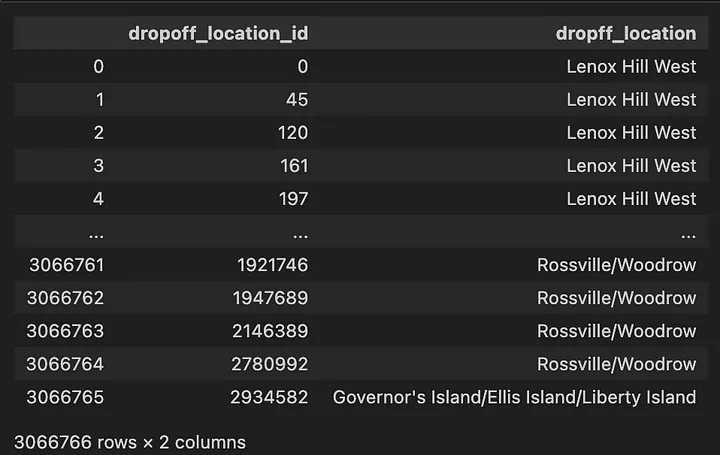

Creating dropoff_location_dim dimension table

# preparing dimension table

dropoff_location_dim = df[['DOLocationID']].reset_index(drop=True)

dropoff_location_dim['dropoff_location_id'] = dropoff_location_dim.index

cols_select = ['dropoff_location_id','location']

# Join `dropoff_location_dim` with `location_dim`

dropoff_location_dim = dropoff_location_dim.merge(location_dim, left_on='DOLocationID', right_on='location_id')[cols_select]

# giving proper ordering to columns for readability purpose

cols_seq = ['dropoff_location_id','location']

dropoff_location_dim = dropoff_location_dim[cols_seq]

#rename `location` to `pickup_location` for good readability

dropoff_location_dim = dropoff_location_dim.rename(columns={'location':'dropff_location'})

display(dropoff_location_dim)

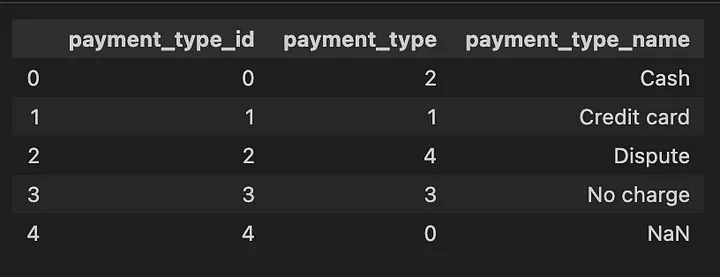

Creating payment_type_dim dimension table

if we look in the data dictionary the information about payment_type is provided e.g 1 = ‘Credit Card’ etc. This would be helpful for analysts when making complex join on data for analytical purposes

payment_type_name = {

1:"Credit card",

2:"Cash",

3:"No charge",

4:"Dispute",

5:"Unknown",

6:"Voided trip"

}

payment_type_dim = df[['payment_type']].drop_duplicates().reset_index(drop=True)

payment_type_dim['payment_type_id'] = payment_type_dim.index

payment_type_dim['payment_type_name'] = payment_type_dim['payment_type'].map(payment_type_name)

# giving proper ordering to columns for readability purpose

cols_seq = ['payment_type_id','payment_type','payment_type_name']

payment_type_dim = payment_type_dim[cols_seq]

display(payment_type_dim)

Creating fact_table dimension table

The most important table, where all of the data gets connected. This table doesn’t hold descriptive information about the items themselves or the customers — instead, it links to other tables (like dimensions) that contain this additional information

So, while the fact table holds the numeric facts or measurements, it references other tables to provide the context or details about those facts. think of a fact table as the central hub of a data warehouse or database. It’s where all the key metrics and measurements are stored, organized, and connected.

fact_table = df.merge(passenger_count_dim, left_on='trip_id', right_on='passenger_count_id', how='left') \

.merge(trip_distance_dim, left_on='trip_id', right_on='trip_distance_id', how='left') \

.merge(rate_code_dim, left_on='RatecodeID',right_on='RatecodeID',how='left') \

.merge(pickup_location_dim, left_on='trip_id', right_on='pickup_location_id',how='left') \

.merge(dropoff_location_dim, left_on='trip_id', right_on='dropoff_location_id',how='left') \

.merge(datetime_dim, left_on='trip_id', right_on='datetime_id',how='left')\

.merge(payment_type_dim, left_on='payment_type', right_on='payment_type',how='left')

cols_required = ['trip_id','VendorID', 'datetime_id', 'passenger_count_id',

'trip_distance_id', 'rate_code_id', 'store_and_fwd_flag', 'pickup_location_id', 'dropoff_location_id',

'payment_type_id', 'fare_amount', 'extra', 'mta_tax', 'tip_amount', 'tolls_amount',

'improvement_surcharge', 'total_amount']

fact_table = fact_table[cols_required]

display(fact_table.head())

as we can see the table contains foreign keys of the dimension table and it represent a consolidated table which can link different tables to get different information of the trips.

Applying Analytics

What good a data model is when we can’t apply analytics to it. I have prepared some questions in advance that we can answer. I will be using DuckDB for this exercise but in future articles (i hope :v ) i will be utilising click-house.

I will be creating a helper function for duckdb. a simple function when string of query is given, if will execute it and return dataframe

import duckdb

def run(q: str = ""):

""" A function takes SQL Query and executes it on dataframe"""

out = duckdb.query(q)

display(out)

return out

Note: In DuckDB we don’t have to explicitly insert data, the DuckDB engine treats the pandas dataframe as tables.

for example if you have a dataframe name

some_random_df_name = pd.read_csv(…)

in duckdb you can directly apply query on some_random_df_name variable. since, it is a dataframe object, it will treat it as a table. so,

select * from some_random_df_name;

is a completely valid statement

Type of Questions we will be answering using our data model

Basic Statistics:

- What is the average trip distance?

- What is the most common payment type?

- Average trip distance for trips with a tip_amount greater than $10, excluding trips with a payment type of ‘Cash’.

# What is the average trip distance?

q = """

SELECT round(avg(trip_distance),2) as average_distance

FROM trip_distance_dim

"""

run(q)

-- output

┌──────────────────┐

│ average_distance │

│ double │

├──────────────────┤

│ 3.85 │

└──────────────────┘

# What is the most common payment type?

TOP_K = 3

q = f"""

WITH PAYMENT_NAME_AGG_COUNTS AS (

SELECT payment_type, payment_type_name , count(*) as count_

FROM payment_type_dim

JOIN fact_table ON fact_table.payment_type_id = payment_type_dim.payment_type_id

GROUP BY payment_type_dim.payment_type, payment_type_dim.payment_type_name

), PAYMENT_RANK AS (

SELECT *, ROW_NUMBER() OVER(ORDER BY count_ DESC) as rnk

FROM PAYMENT_NAME_AGG_COUNTS

) SELECT payment_type_name, count_ FROM PAYMENT_RANK WHERE rnk <= {TOP_K}

"""

run(q)

-- output

┌───────────────────┬─────────┐

│ payment_type_name │ count_ │

│ varchar │ int64 │

├───────────────────┼─────────┤

│ Credit card │ 2411462 │

│ Cash │ 532241 │

│ NULL │ 71743 │

└───────────────────┴─────────┘

# - Average trip distance for trips with a tip_amount greater than $10, excluding trips with a payment type of 'Cash'.

q = """

WITH TRIP_DISTANCE_BASE AS (

SELECT round(avg(trip_distance_dim.trip_distance)) as 'average_trip_distance(miles)'

FROM fact_table

JOIN trip_distance_dim ON fact_table.trip_id = trip_distance_dim.trip_distance_id

JOIN payment_type_dim ON fact_table.payment_type_id = payment_type_dim.payment_type_id

WHERE fact_table.tip_amount > 10 and lower(payment_type_dim.payment_type_name) = 'cash'

) select * from TRIP_DISTANCE_BASE

"""

run(q)

-- output

┌──────────────────────────────┐

│ average_trip_distance(miles) │

│ double │

├──────────────────────────────┤

│ 15.0 │

└──────────────────────────────┘

Vendor Comparison:

- How many trips were made by each VendorID?

- Which vendor has the highest average fare_amount?

# How many trips were made by each VendorID?

q = """

SELECT VendorID, count(*) as total_trips

FROM fact_table

GROUP BY VendorID

ORDER BY count(*) DESC

"""

run(q)

-- output

┌──────────┬─────────────┐

│ VendorID │ total_trips │

│ int64 │ int64 │

├──────────┼─────────────┤

│ 2 │ 2239399 │

│ 1 │ 827367 │

└──────────┴─────────────┘

# Which vendor has the highest average fare_amount?

q = """

WITH VENDOR_FARE_AMOUNT AS (

SELECT VendorID, round(avg(fare_amount),2) as avg_fare_amount

FROM fact_table

GROUP BY VendorID

), VENDOR_MAX_AMOUNT_RNK AS (

SELECT VendorID, avg_fare_amount, row_number() OVER(ORDER BY avg_fare_amount DESC) as RNK

FROM VENDOR_FARE_AMOUNT

) SELECT VendorID, avg_fare_amount FROM VENDOR_MAX_AMOUNT_RNK WHERE RNK = 1

"""

run(q)

-- output

┌──────────┬─────────────────┐

│ VendorID │ avg_fare_amount │

│ int64 │ double │

├──────────┼─────────────────┤

│ 2 │ 18.71 │

└──────────┴─────────────────┘

Time Analysis:

- What is the average number of passengers for trips that occurred on Sundays?

- What are the peak hours for taxi trips based on the pick-up date-time?

#- What is the average number of passengers for trips that occurred on Sundays?

q = """

SELECT round(avg(passenger_count_dim.passenger_count),2) as average_passenger_count

FROM fact_table

JOIN passenger_count_dim ON passenger_count_dim.passenger_count_id = fact_table.passenger_count_id

JOIN datetime_dim ON datetime_dim.datetime_id = fact_table.datetime_id

WHERE lower(datetime_dim.pick_day) = 'sunday' or datetime_dim.drop_day = 'sunday'

"""

print("What is the average number of passengers for trips that occurred on Sundays?")

run(q)

-- output

┌─────────────────────────┐

│ average_passenger_count │

│ double │

├─────────────────────────┤

│ 1.45 │

└─────────────────────────┘

#- What are the peak hours for taxi trips based on the pick-up datetime?

print("What are the peak hours for taxi trips based on the pick-up datetime?")

q = """

WITH TRIPS_BY_HOUR_COUNT AS (

SELECT datetime_dim.pick_hour, count(*) as total_trips

FROM fact_table

JOIN datetime_dim ON datetime_dim.datetime_id = fact_table.datetime_id

GROUP BY datetime_dim.pick_hour

), TRIPS_BY_HOUR_RANK AS (

SELECT pick_hour, total_trips, DENSE_RANK() OVER(ORDER BY total_trips) as rnk

FROM TRIPS_BY_HOUR_COUNT

) SELECT pick_hour,total_trips FROM TRIPS_BY_HOUR_RANK WHERE rnk = 1

"""

run(q)

-- output

┌───────────┬─────────────┐

│ pick_hour │ total_trips │

│ int32 │ int64 │

├───────────┼─────────────┤

│ 4 │ 17835 │

└───────────┴─────────────┘

Location-Based Analysis:

- Identify zones with the highest average total_amount and the lowest average trip_distance.

- Which pickup location has the highest tip_amount amount?

# Identify zones with the highest average total_amount and the lowest average trip_distance

q = """

WITH BASE AS (

SELECT

pickup_location_dim.pickup_location as zone,

round(avg(total_amount),2) as avg_total_amount,

round(avg(trip_distance_dim.trip_distance_id),2) as avg_trip_distance

FROM fact_table

join trip_distance_dim ON fact_table.trip_id = trip_distance_dim.trip_distance_id

JOIN pickup_location_dim ON pickup_location_dim.pickup_location_id = fact_table.pickup_location_id

WHERE pickup_location_dim.pickup_location IS NOT NULL

GROUP BY pickup_location_dim.pickup_location

) SELECT zone, avg_total_amount as avg_highest_trip_amount, avg_trip_distance as avg_lowest_trip_distance

FROM BASE ORDER BY avg_total_amount DESC, avg_trip_distance ASC LIMIT 1

"""

run(q)

-- output

┌────────────────┬─────────────────────────┬──────────────────────────┐

│ zone │ avg_highest_trip_amount │ avg_lowest_trip_distance │

│ varchar │ double │ double │

├────────────────┼─────────────────────────┼──────────────────────────┤

│ Newark Airport │ 104.38 │ 1212392.68 │

└────────────────┴─────────────────────────┴──────────────────────────┘

# Which pickup location has the highest tip_amount amount?

q = """

WITH BASE AS(

SELECT

pickup_location_dim.pickup_location as zone,

fact_table.tip_amount,

ROW_NUMBER() OVER(ORDER BY fact_table.tip_amount DESC) as rnk

FROM fact_table

JOIN pickup_location_dim ON pickup_location_dim.pickup_location_id = fact_table.pickup_location_id

) SELECT zone, tip_amount as highest_tip_amount FROM BASE WHERE rnk = 1

"""

print("Which pickup location has the highest tip_amount amount?")

run(q)

-- output

┌───────────────────────┬────────────────────┐

│ zone │ highest_tip_amount │

│ varchar │ double │

├───────────────────────┼────────────────────┤

│ Upper East Side North │ 380.8 │

└───────────────────────┴────────────────────┘

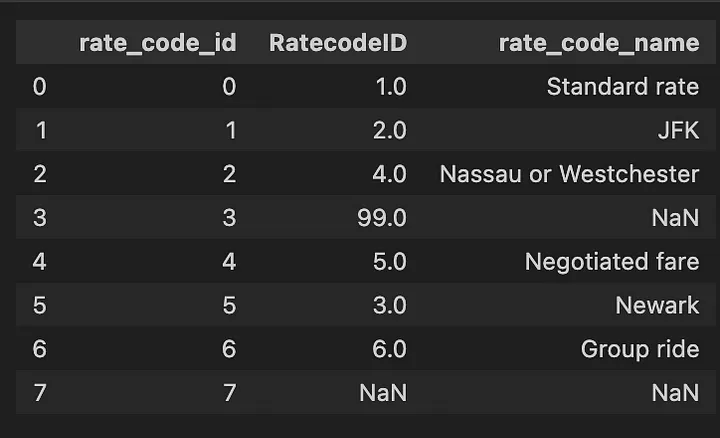

Rate Code Insights:

- How many trips were made for each rate code type?

- What is the average total_amount for each rate code type?

# How many trips were made for each rate code type?

q = """

SELECT rate_code_dim.rate_code_name, count(*) as trip_count

FROM fact_table

JOIN rate_code_dim ON rate_code_dim.RatecodeID = fact_table.rate_code_id

GROUP BY rate_code_dim.rate_code_name

ORDER BY trip_count DESC

"""

print("How many trips were made for each rate code type?")

run(q)

-- output

┌───────────────────────┬────────────┐

│ rate_code_name │ trip_count │

│ varchar │ int64 │

├───────────────────────┼────────────┤

│ Standard rate │ 114239 │

│ Nassau or Westchester │ 15043 │

│ Newark │ 13106 │

│ Negotiated fare │ 8958 │

│ JFK │ 4366 │

│ Group ride │ 6 │

└───────────────────────┴────────────┘

# What is the average total_amount for each rate code type?

q = """

SELECT rate_code_dim.rate_code_name, round(avg(fact_table.total_amount),2) as avg_total_amount

FROM fact_table

JOIN rate_code_dim ON rate_code_dim.RatecodeID = fact_table.rate_code_id

GROUP BY rate_code_dim.rate_code_name

ORDER BY avg_total_amount DESC

"""

print("What is the average total_amount for each rate code type?")

run(q)

-- output

┌───────────────────────┬──────────────────┐

│ rate_code_name │ avg_total_amount │

│ varchar │ double │

├───────────────────────┼──────────────────┤

│ Group ride │ 132.78 │

│ JFK │ 119.95 │

│ Negotiated fare │ 107.4 │

│ Standard rate │ 89.09 │

│ Nassau or Westchester │ 81.39 │

│ Newark │ 37.76 │

└───────────────────────┴──────────────────┘

Conclusion:

In conclusion, This transformation of raw data into organized structures empowers efficient analysis and modeling. By creating distinct dimension tables encompassing attributes like date-time, passenger count, trip distance, payment types, among others, we have established a strong foundation for our data model.

This model facilitates effective merging and querying of information, laying the groundwork for deeper insights. Moreover, exploring automation using Python libraries, understanding the advantages of the Parquet file format, and conducting analytical queries has equipped us to derive valuable and meaningful insights from this extensive dataset.

You can reach out to me directly in case of any questions:

Email: danial.shabbir77@gmail.com

Github: http://github.com/danielox

About me: http://danielox.github.io/

LinkedIn: http://linkedin.com/in/danial-shabbir/

Refrences:

https://duckdb.org/docs/

https://pandas.pydata.org/getting_started.html

https://www.python.org/

The ideas was inspired by Darshil Parmer Video

What's Your Reaction?