Stormforge and Karpenter With EKS

When it comes to proper resource optimization, you have to ask yourself four questions:

- Does my application stack have enough resources?

- Does my application stack have too many resources?

- Does my cluster have enough resources?

- Does my cluster have too many resources?

Answering these four questions is what scaling up and down is all about.

In this blog post, you’ll learn about two tools for ensuring proper resource optimization: Karpenter and Stormforge.

Why Karpenter and Stormforge

When it comes to scaling up and scaling down, there are a few things that occur.

Your application stack asks for resources. Then, once it’s done with those resources, it (hopefully) gives them back and they return to the pool. The resources are typically memory and CPU.

However, before that can occur, the resources need to be available. That’s where resource optimization for Worker Nodes comes into play. For the resources to be available, the Worker Nodes must have enough CPU and memory to give out. If there aren’t enough Worker Nodes, a new one comes online. Then, if the extra Worker Node isn’t needed, it goes away.

Scale up and scale down.

Stormforge handles the first part (the application stack resources).

Karpenter handles the second part (scaling up and down Worker Nodes).

Together, you have proper resource optimization.
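To make the scale up and scale down decision concrete, here’s a rough sketch in shell arithmetic. Every number in it is made up for illustration, and it only looks at CPU requests. A real scheduler also weighs memory, DaemonSets, and other constraints, so treat this as a back-of-the-napkin model rather than how Karpenter actually decides.

```shell
# Hypothetical numbers, for illustration only.
POD_CPU_REQUEST_M=500     # each pod requests 500 millicores of CPU
REPLICAS=10               # number of pods in the application stack
NODE_ALLOCATABLE_M=4000   # CPU each Worker Node can hand out, in millicores
CURRENT_NODES=1           # Worker Nodes currently in the cluster

# Total CPU the application stack is asking for.
TOTAL_REQUEST_M=$((POD_CPU_REQUEST_M * REPLICAS))

# Ceiling division: how many Worker Nodes are needed to satisfy the requests.
NODES_NEEDED=$(( (TOTAL_REQUEST_M + NODE_ALLOCATABLE_M - 1) / NODE_ALLOCATABLE_M ))

if [ "$NODES_NEEDED" -gt "$CURRENT_NODES" ]; then
  echo "scale up: need $NODES_NEEDED Worker Nodes, have $CURRENT_NODES"
elif [ "$NODES_NEEDED" -lt "$CURRENT_NODES" ]; then
  echo "scale down: need $NODES_NEEDED Worker Nodes, have $CURRENT_NODES"
else
  echo "no change: $CURRENT_NODES Worker Nodes is enough"
fi
```

Lowering `POD_CPU_REQUEST_M` is the application-side lever, and changing the node count is the cluster-side lever.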

Stormforge

Configuring Stormforge is a two-pronged approach. There’s a GUI piece and an installation piece.

First, go to the Stormforge website: https://www.stormforge.io/

Next, click the trial button as shown in the screenshot below.

You’ll be prompted to enter an email address.

Upon completion, you’ll receive a 30-day free trial. You can extend it by 15 days if you run out of time.

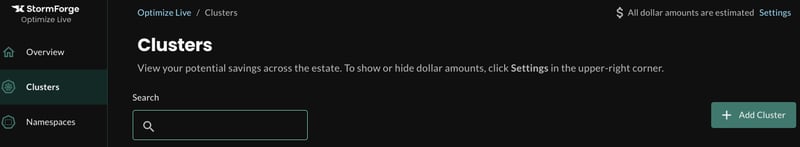

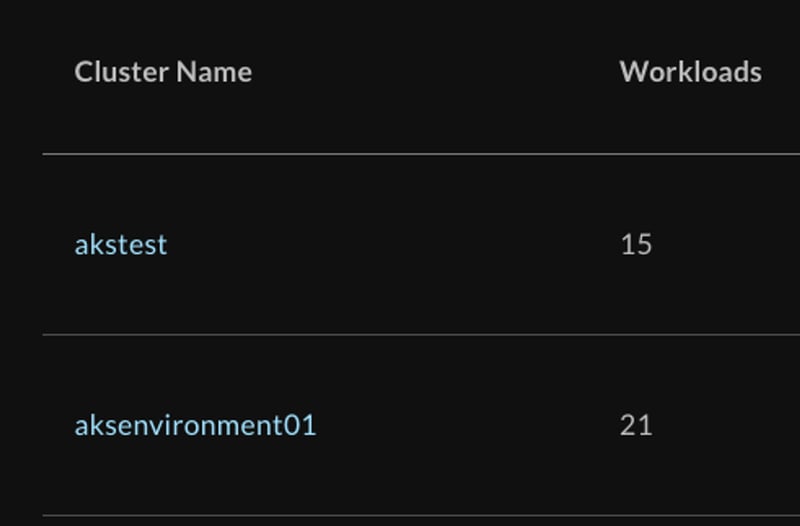

Once you log in, you’ll see a screen similar to the one below where you can begin to add clusters.

Click the green + Add Cluster button.

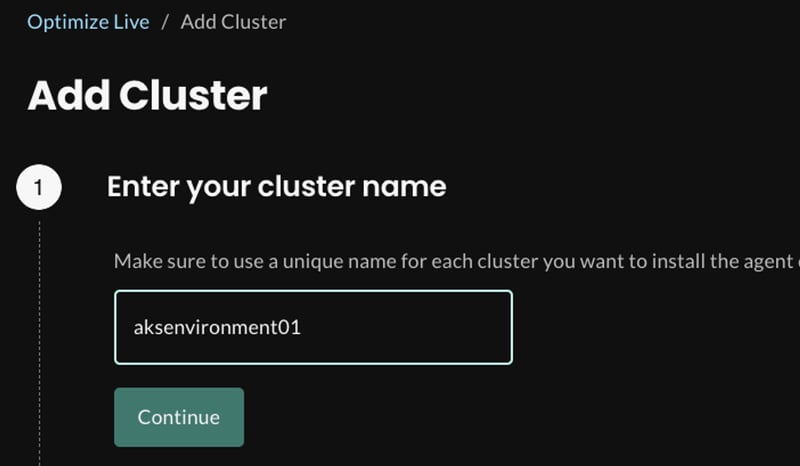

The first screen will be to add a cluster. Type in your Kubernetes cluster name.

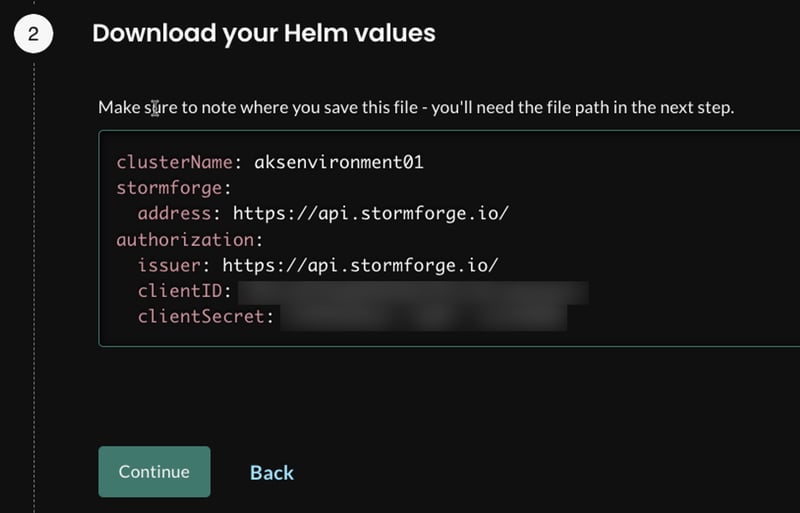

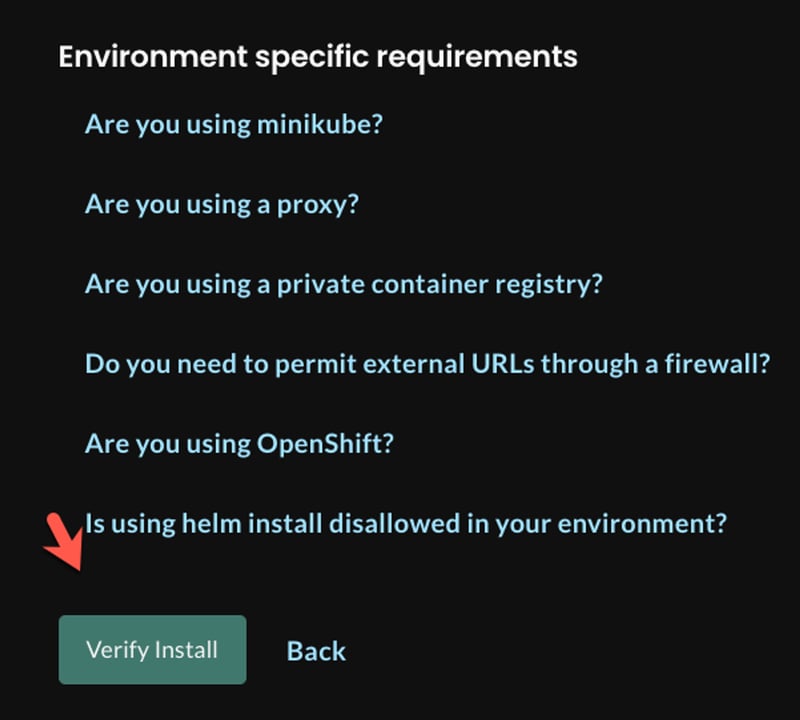

Next, copy the values.yaml file shown in the screenshot below and save it.

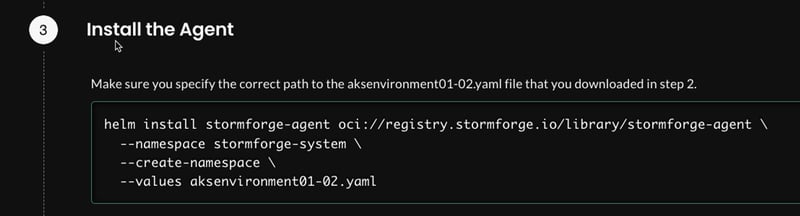

The last step is to install the agent. The agent receives its configuration from the values.yaml file you saved in the previous step.

Verify the install.

You should now see that your cluster exists.
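The install and verify steps above might look something like the sketch below if the agent is delivered as a Helm chart, which the values.yaml file suggests. The chart reference, release name, and namespace here are assumptions that don’t come from this post, so prefer the exact command the Stormforge UI gives you if it differs.

```shell
# Assumptions (not from this post): chart reference, release name, and namespace.
# Confirm all three against the command shown in the Stormforge UI.
CHART_REF="oci://registry.stormforge.io/library/stormforge-agent"
RELEASE="stormforge-agent"
NAMESPACE="stormforge-system"
VALUES_FILE="values.yaml"   # the file saved from the UI in the previous step

INSTALL_STATUS="skipped"
if command -v helm >/dev/null 2>&1 && command -v kubectl >/dev/null 2>&1 \
    && [ -f "$VALUES_FILE" ]; then
  # Install the agent using the values copied from the UI.
  helm install "$RELEASE" "$CHART_REF" \
    --namespace "$NAMESPACE" --create-namespace \
    --values "$VALUES_FILE"
  # Verify the install: the agent pods should reach the Running state.
  kubectl get pods --namespace "$NAMESPACE"
  INSTALL_STATUS="installed"
else
  echo "helm, kubectl, or $VALUES_FILE not found; skipping install"
fi
```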

Stormforge now has the access it needs to make sure resources are properly configured for your application stacks.

Karpenter Configuration

For Karpenter to work properly, you need three things:

- An IAM Role with the proper permissions.

- Karpenter running on the cluster.

- The Node Pool (Worker Node) configurations.

For the purposes of this blog post, you’ll see how it’s done with EKS. Karpenter is also now available in beta for AKS, but the process there is different.

AWS IAM Role and Permissions

The first step is to create a policy that allows Karpenter to scale up and scale down Worker Nodes. You’ll notice in the policy below that it’s primarily focused on EC2, which is where the Worker Nodes run.

    {
      "Statement": [
        {
          "Action": [
            "ssm:GetParameter",
            "iam:PassRole",
            "ec2:DescribeImages",
            "ec2:RunInstances",
            "ec2:DescribeSubnets",
            "ec2:DescribeSecurityGroups",
            "ec2:DescribeLaunchTemplates",
            "ec2:DescribeInstances",
            "ec2:DescribeInstanceTypes",
            "ec2:DescribeInstanceTypeOfferings",
            "ec2:DescribeAvailabilityZones",
            "ec2:DeleteLaunchTemplate",
            "ec2:CreateTags",
            "ec2:CreateLaunchTemplate",
            "ec2:CreateFleet",
            "ec2:DescribeSpotPriceHistory",
            "pricing:GetProducts"
          ],
          "Effect": "Allow",
          "Resource": "*",
          "Sid": "Karpenter"
        },
        {
          "Action": "ec2:TerminateInstances",
          "Condition": {
            "StringLike": {
              "ec2:ResourceTag/Name": "*karpenter*"
            }
          },
          "Effect": "Allow",
          "Resource": "*",
          "Sid": "ConditionalEC2Termination"
        }
      ],
      "Version": "2012-10-17"
    }

Save the policy as policy.json.

Next, pass the policy file to the create-policy subcommand of aws iam.

    aws iam create-policy --policy-name KarpenterControllerPolicy --policy-document file://policy.json

The next step is to associate the OIDC provider that comes with EKS to IAM. Out of the box, even though it’s EKS running on AWS, the cluster’s OIDC provider isn’t associated with AWS IAM. Without that association, a Service Account inside the EKS cluster can’t assume an IAM Role. Replace k8squickstart-cluster with the name of your cluster.

    eksctl utils associate-iam-oidc-provider --cluster k8squickstart-cluster --approve

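Last but certainly not least, create the IAM Role that the Karpenter Service Account will use. Because of the --role-only flag, eksctl creates only the IAM Role and skips creating the Service Account in the cluster; the Service Account is created and annotated with the role during the Helm install in the next section. The role is what gives Karpenter the proper permissions for scaling up and down Worker Nodes.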

    eksctl create iamserviceaccount \
      --cluster "your_cluster_name" --name karpenter --namespace karpenter \
      --role-name "KarpenterInstanceNodeRole" \
      --attach-policy-arn "arn:aws:iam::912101370089:policy/KarpenterControllerPolicy" \
      --role-only \
      --approve
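The command above hard-codes an AWS account ID in the policy ARN, and yours will be different. Here’s a small sketch that builds the ARN from whichever account your AWS CLI credentials belong to. The variable names are illustrative, and the `<your_account_id>` fallback is just a placeholder for when the AWS CLI or credentials aren’t available.

```shell
# Name used with `aws iam create-policy` earlier in this post.
POLICY_NAME="KarpenterControllerPolicy"

# Ask AWS which account the active credentials belong to.
# If the CLI or credentials are missing, the lookup result stays empty.
account_id=""
if command -v aws >/dev/null 2>&1; then
  account_id="$(aws sts get-caller-identity --query Account --output text 2>/dev/null || true)"
fi

# Fall back to an existing AWS_ACCOUNT_ID, then to a placeholder to fill in by hand.
AWS_ACCOUNT_ID="${account_id:-${AWS_ACCOUNT_ID:-<your_account_id>}}"

# Assumes the standard "aws" partition (not GovCloud or China regions).
POLICY_ARN="arn:aws:iam::${AWS_ACCOUNT_ID}:policy/${POLICY_NAME}"
echo "$POLICY_ARN"
```

You can then pass `"$POLICY_ARN"` to `--attach-policy-arn` instead of typing the ARN out.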

Installation and Configuration

Once the IAM Role and permissions are set, you can begin the installation and configuration process.

First, you’ll need to set some environment variables. Karpenter uses them to target the right cluster for Worker Node management and to find the AWS IAM Role that grants it permission to do so.

    export CLUSTER_NAME="your_cluster_name"
    export KARPENTER_IAM_ROLE_ARN=ARN_PATH/KarpenterInstanceNodeRole
    export CLUSTER_ENDPOINT=cluster_endpoint
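If you’d rather not copy the endpoint and role ARN by hand, the AWS CLI can look them up. This is a sketch that assumes the role name from the eksctl command earlier and that your AWS CLI credentials can read both the cluster and the role. When a lookup fails, it keeps whatever value was already exported and prints a warning if the result is empty.

```shell
# Reuse CLUSTER_NAME if it is already exported; otherwise keep the placeholder.
CLUSTER_NAME="${CLUSTER_NAME:-your_cluster_name}"
# Role name taken from the eksctl command earlier in this post.
ROLE_NAME="KarpenterInstanceNodeRole"

endpoint=""
role_arn=""
if command -v aws >/dev/null 2>&1; then
  # Both lookups need valid AWS credentials; on failure the values stay empty.
  endpoint="$(aws eks describe-cluster --name "$CLUSTER_NAME" \
    --query "cluster.endpoint" --output text 2>/dev/null || true)"
  role_arn="$(aws iam get-role --role-name "$ROLE_NAME" \
    --query "Role.Arn" --output text 2>/dev/null || true)"
fi

# Prefer the looked-up values, then anything already set in the environment.
export CLUSTER_NAME
export CLUSTER_ENDPOINT="${endpoint:-${CLUSTER_ENDPOINT:-}}"
export KARPENTER_IAM_ROLE_ARN="${role_arn:-${KARPENTER_IAM_ROLE_ARN:-}}"

# Warn early instead of handing empty values to Helm.
[ -n "$CLUSTER_ENDPOINT" ] || echo "warning: CLUSTER_ENDPOINT is empty" >&2
[ -n "$KARPENTER_IAM_ROLE_ARN" ] || echo "warning: KARPENTER_IAM_ROLE_ARN is empty" >&2
```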

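Karpenter can be (and should be) installed via Helm. Package management is the way to go for something like this because it makes upgrades and patching far easier to manage. The command below refers to the chart as karpenter/karpenter, so it assumes a Helm repository named karpenter has already been added.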

    helm upgrade --install --namespace karpenter --create-namespace \
      karpenter karpenter/karpenter \
      --set serviceAccount.annotations."eks\.amazonaws\.com/role-arn"="${KARPENTER_IAM_ROLE_ARN}" \
      --set clusterName="${CLUSTER_NAME}" \
      --set clusterEndpoint="${CLUSTER_ENDPOINT}" \
      --set aws.defaultInstanceProfile="KarpenterNodeInstanceProfile-${CLUSTER_NAME}"

The last step is to install the CRDs. The CRDs define the resources (Node Pools, Node Claims, and EC2 Node Classes) that you’ll use to configure Karpenter via the Karpenter API.

    kubectl apply -f https://raw.githubusercontent.com/aws/karpenter/main/pkg/apis/crds/karpenter.sh_nodepools.yaml
    kubectl apply -f https://raw.githubusercontent.com/aws/karpenter/main/pkg/apis/crds/karpenter.sh_nodeclaims.yaml
    kubectl apply -f https://raw.githubusercontent.com/aws/karpenter/main/pkg/apis/crds/karpenter.k8s.aws_ec2nodeclasses.yaml
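A quick way to confirm the install is to check that the three CRDs are registered and that the Karpenter pods are up. This sketch assumes kubectl is pointed at the right cluster and that Karpenter was installed in the karpenter namespace, as in the Helm command earlier in this post.

```shell
# CRD names that correspond to the three manifests applied in this step.
CRDS="nodepools.karpenter.sh nodeclaims.karpenter.sh ec2nodeclasses.karpenter.k8s.aws"
CHECKED=0

if command -v kubectl >/dev/null 2>&1; then
  for crd in $CRDS; do
    if kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "found:   $crd"
    else
      echo "missing: $crd"
    fi
    CHECKED=$((CHECKED + 1))
  done
  # The controller pods should be Running in the namespace used by the Helm install.
  kubectl get pods --namespace karpenter || true
else
  echo "kubectl not found; skipping checks"
fi
```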

Congrats! You have successfully installed Karpenter.
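With Karpenter installed, the remaining piece from the list at the start of this section is the Node Pool configuration. Below is a minimal sketch that writes a NodePool and an EC2NodeClass manifest to a file. It assumes the v1beta1 versions of the Karpenter APIs; newer releases serve v1, where some fields differ, so check the version your CRDs expose. The node role name and the karpenter.sh/discovery tag are hypothetical conventions that would need to exist in your AWS account, not values taken from this post.

```shell
# Reuse CLUSTER_NAME if it is already exported; otherwise keep the placeholder.
CLUSTER_NAME="${CLUSTER_NAME:-your_cluster_name}"

# Write both manifests to one file. ${CLUSTER_NAME} is expanded by the shell.
cat > nodepool.yaml <<EOF
apiVersion: karpenter.sh/v1beta1   # assumption: v1beta1 API
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      nodeClassRef:
        name: default              # points at the EC2NodeClass below
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
  limits:
    cpu: "100"                     # cap on total CPU this Node Pool may provision
  disruption:
    consolidationPolicy: WhenUnderutilized   # remove Worker Nodes that are no longer needed
---
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2
  role: "KarpenterNodeRole-${CLUSTER_NAME}"  # hypothetical node role name
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"  # hypothetical tag on your subnets
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"  # hypothetical tag on your security groups
EOF

echo "wrote nodepool.yaml"
# Review the file, then apply it with: kubectl apply -f nodepool.yaml
```

The `limits` and `disruption` fields are where the scale up and scale down behavior from the start of this post gets configured: one caps how far the cluster can grow, the other lets Karpenter take away Worker Nodes that aren’t needed.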

Wrapping Up

Ensuring that both your application stack and Worker Nodes have enough resources, or can release resources they no longer need, is crucial for any environment. It’s the difference between reasonable costs and incredibly high ones. It’s also the difference between an application that doesn’t run as expected and one that runs properly for customers.
